Safe Human Robot Interaction by Using Exteroceptive Sensing based Human Modeling

PI: Prof. S K Gupta

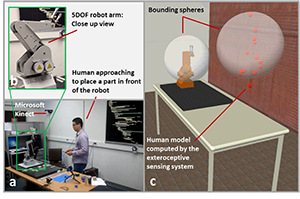

We have developed an exteroceptive-sensing-based framework for safe human-robot interaction during shared tasks. Our approach allows a human to work in close proximity to a robot and pauses the robot’s motion whenever a collision between the human and the robot is imminent. An overview of the system is shown in Fig. 1. The human’s presence is sensed by an N-range-sensor system, which consists of multiple range sensors mounted at various points on the periphery of the work cell. Each range sensor, based on a Microsoft Kinect, observes the human and outputs a 20-joint human model. Using multiple sensors mitigates occlusion: a joint hidden from one sensor can still be observed by another. For the class of collaborative tasks considered in this work, the work volume is cylindrical, so no sensor needs to be placed directly above the robot; instead, sensors must be placed radially around the periphery of the work cell. We find that four sensors, mounted at the four corners of the work cell, are sufficient to cover the work volume. The positional data of the human models from all sensors are fused to generate a refined human model.
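The fusion step is not detailed on this page. As a rough illustration only, the sketch below assumes that each sensor's 20 joint positions have already been transformed into a common work-cell frame, that an occluded joint is reported as `None`, and that each joint carries a tracking confidence between 0 and 1. The function name `fuse_skeletons` and the confidence-weighted averaging rule are assumptions made for this example, not the method used in the actual system.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]

NUM_JOINTS = 20  # each sensor outputs a 20-joint human model


def fuse_skeletons(
    skeletons: Sequence[Sequence[Optional[Point]]],
    weights: Sequence[Sequence[float]],
) -> List[Optional[Point]]:
    """Fuse per-sensor joint positions into one refined skeleton.

    skeletons[s][j] is joint j as seen by sensor s, expressed in a common
    frame, or None if that sensor cannot see the joint (assumption).
    weights[s][j] is a hypothetical tracking confidence in [0, 1].
    """
    fused: List[Optional[Point]] = []
    for j in range(NUM_JOINTS):
        total_weight = 0.0
        acc = [0.0, 0.0, 0.0]
        for s, skeleton in enumerate(skeletons):
            point = skeleton[j]
            w = weights[s][j]
            if point is None or w <= 0.0:
                continue  # this sensor contributes nothing for joint j
            total_weight += w
            for k in range(3):
                acc[k] += w * point[k]
        if total_weight == 0.0:
            fused.append(None)  # joint occluded from every sensor
        else:
            fused.append(
                (acc[0] / total_weight, acc[1] / total_weight, acc[2] / total_weight)
            )
    return fused
```

With this rule, a joint hidden from one sensor is taken from the sensors that still see it, which is the occlusion benefit described above; a joint hidden from all sensors stays undefined rather than being guessed.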

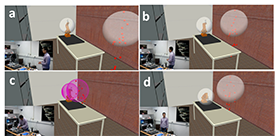

Next, the robot and the generated human model are approximated by dynamic bounding spheres that move in 3D space in real time, following the movements of the robot and the human. We designed a pre-collision strategy that controls the robot’s motion by tracking collisions between the two bounding spheres. For this purpose, we developed a virtual simulation engine based on Tundra software. A simulated robot, with a configuration identical to that of the physical robot, is built and instantiated within the virtual environment. The simulated robot replicates the motion of the physical robot in real time by using the same motor commands that drive the physical robot. Similarly, a simulated human model replicates the motion of the refined human model generated by the sensing system. As the robot and the human move in a shared region during a collaborative task, any intersection between the two bounding spheres is detected as an imminent collision condition and is used to pause the robot’s motion; a visual alarm (the sphere changes color from white to red) and an audio alarm are raised to alert the human. The robot automatically resumes its task after the human moves into a safety zone.
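The pause-and-resume logic can be sketched as a small state machine. This is a minimal illustration, not the Tundra-based implementation: it treats the robot and the human as one sphere each, declares an imminent collision when the distance between centers is no greater than the sum of the radii, and models the "safety zone" as a hypothetical clearance `safety_margin` that the gap between the spheres must reach before the robot resumes. The names `Sphere`, `PreCollisionMonitor`, and `safety_margin` are assumptions introduced for this example.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Sphere:
    center: Tuple[float, float, float]
    radius: float


def spheres_intersect(a: Sphere, b: Sphere) -> bool:
    """Two spheres intersect when center distance <= sum of radii."""
    return math.dist(a.center, b.center) <= a.radius + b.radius


class PreCollisionMonitor:
    """Tracks whether the robot should currently be paused."""

    def __init__(self, safety_margin: float) -> None:
        # Hypothetical clearance that stands in for the "safety zone".
        self.safety_margin = safety_margin
        self.paused = False

    def update(self, robot: Sphere, human: Sphere) -> bool:
        """Process one frame; return True if the robot must stay paused."""
        gap = math.dist(robot.center, human.center) - (robot.radius + human.radius)
        if gap <= 0.0:
            self.paused = True  # spheres intersect: imminent collision
        elif self.paused and gap >= self.safety_margin:
            self.paused = False  # human is back in the safety zone
        return self.paused
```

Requiring a positive margin before resuming, rather than resuming as soon as the spheres separate, keeps the robot from rapidly toggling between paused and running when the human hovers at the boundary.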

Whereas most previous sensing methods relied on depth data from camera images to build a human model, our approach is one of the first successful attempts to build it directly from the skeletal output of multiple Kinects. The real-time behavior observed during experiments with a 5 DOF robot and a human safely performing shared assembly tasks validates our approach. An illustrative experimental result is shown in the figure below.

Fig. 1. System overview: (a) Work cell used to evaluate human-robot interaction during a shared task. (b) 5 DOF robot used for experiments. (c) Visualization of the interference between the robot and the human.

(a) The human is far away from the robot. As the distance between the spheres is significant, the robot performs its intended task. (b) The human carries a part toward the front of the robot, which continues its task because the spheres do not intersect. (c) An imminent collision is detected by the system; therefore, the robot is paused and a visual alarm is raised (the bounding spheres change color). (d) The human returns to a safety zone; therefore, the robot resumes its motion.